human ingenuity data intelligence


dataLearning partners with businesses to help them realise their full data potential.

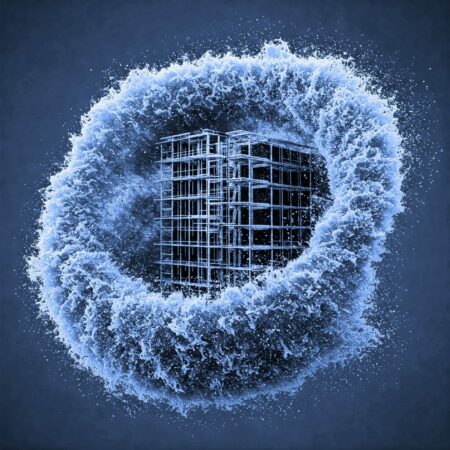

Developed by dataLearning in partnership with AIRC.digital, CloudForma streamlines one of the most time-consuming steps in digital construction workflows: turning raw scans (E57) into structured BIM (IFC), automatically.

We’re currently:

🔹 Looking for early users to test the platform and give feedback

🔹 Seeking investors to accelerate development and scale the platform

Our experts in analytics, technology and business unlock the value of your data so you can reach your full performance in the digital era.

To tackle your data-driven projects, we design human-centred solutions and build increasingly ambitious digital platforms.

We leverage advanced analytics, business intelligence, and technology.

Seamlessly guiding data projects from conception to production.

Development of algorithms for predicting dementia from a medical database.

Design a data architecture and governance with over 200 business users.

On-site experts work hand in hand with your team to deliver new projects efficiently while building a data culture.

Enable business experts to easily understand and make decisions on disparate data from multiple sources.

Build a digital platform to scale a proprietary operation. Increase productivity by 700% with complete auditability.

Upskill your team. Over 450 professionals trained in data analysis and data visualisation.

Our approach targets speed, value creation and user outcomes.

Together we design a solution tailored to your needs, focusing on empathy, collaboration, ideation, prototyping and testing.

We carry out your projects in several short cycles, or 'sprints', for continuous improvement towards your objectives.